How memetics can go mainstream

In it he talks about the tool I made to “query the collective consciousness”, using LLM embeddings. You can pick any two “poles”, and then test any set of words along that axis. The default poles I have are “good” and “evil”. And most things fall where you’d expect:

“hitler” is evil

“obama”, and “kittens”, are good

“trump” is better than hitler, but worse than “obama”
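For anyone curious what "testing a word along an axis" can look like mechanically, here is a minimal sketch. It is an assumption about the general technique, not the tool's actual code: it treats each word as a vector and projects it onto the line between the two poles. The function names and the tiny 3-dimensional `TOY_EMBEDDINGS` are made up for illustration; a real version would fetch vectors from an embedding model instead.

```python
def _sub(a, b):
    """Element-wise difference of two equal-length vectors."""
    return [x - y for x, y in zip(a, b)]


def _dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def axis_position(word_vec, pole_a_vec, pole_b_vec):
    """Scalar projection of a word onto the axis running from pole A to pole B.

    Returns 0.0 when the word sits at pole A and 1.0 when it sits at pole B.
    Values outside [0, 1] are possible for words "beyond" either pole.
    """
    axis = _sub(pole_b_vec, pole_a_vec)
    length_sq = _dot(axis, axis)
    if length_sq == 0:
        raise ValueError("the two poles must have different vectors")
    return _dot(_sub(word_vec, pole_a_vec), axis) / length_sq


def rank_along_axis(words, embeddings, pole_a, pole_b):
    """Sort words from closest-to-pole-A to closest-to-pole-B."""
    scored = [
        (w, axis_position(embeddings[w], embeddings[pole_a], embeddings[pole_b]))
        for w in words
    ]
    return sorted(scored, key=lambda pair: pair[1])


# Hypothetical toy vectors, invented for this example only.
# Real embeddings have hundreds or thousands of dimensions.
TOY_EMBEDDINGS = {
    "evil": [0.0, 0.0, 1.0],
    "good": [1.0, 0.0, 1.0],
    "kittens": [0.9, 0.3, 1.0],
    "capitalism": [0.5, 0.8, 1.0],
}

if __name__ == "__main__":
    for word, score in rank_along_axis(
        ["kittens", "capitalism"], TOY_EMBEDDINGS, "evil", "good"
    ):
        print(f"{word}: {score:.2f}")
```

Projecting onto the difference between the poles is only one reasonable choice. Comparing cosine similarity to each pole is another, and the two can disagree on borderline words.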

A Cultural Guessing Game

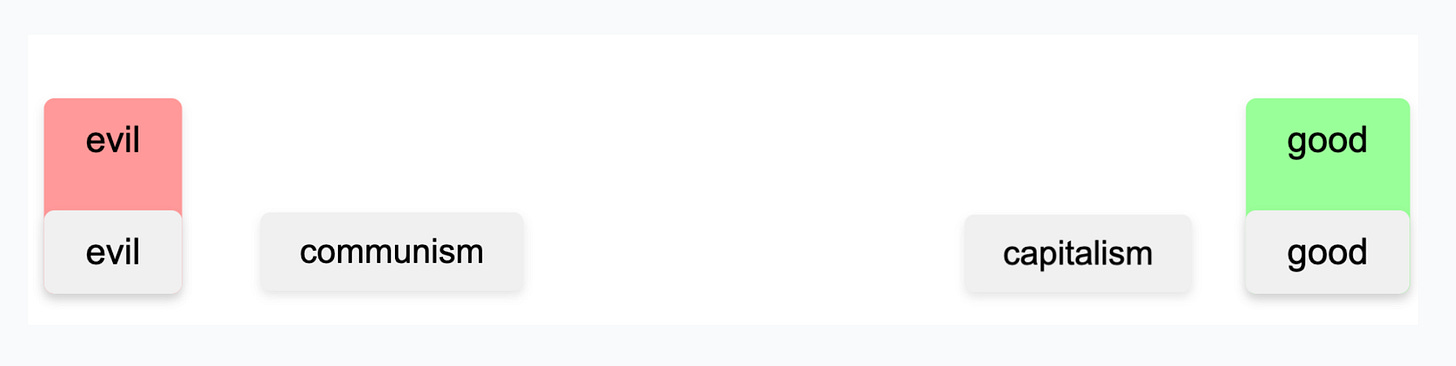

Where would “capitalism” fall on the line of good & evil?

If you asked the rationalists on LessWrong, or any right-leaning group, I’d predict they’d rank these concepts like this:

If you asked on Bluesky, or in any left-leaning group, I’d predict the ranking would be flipped.

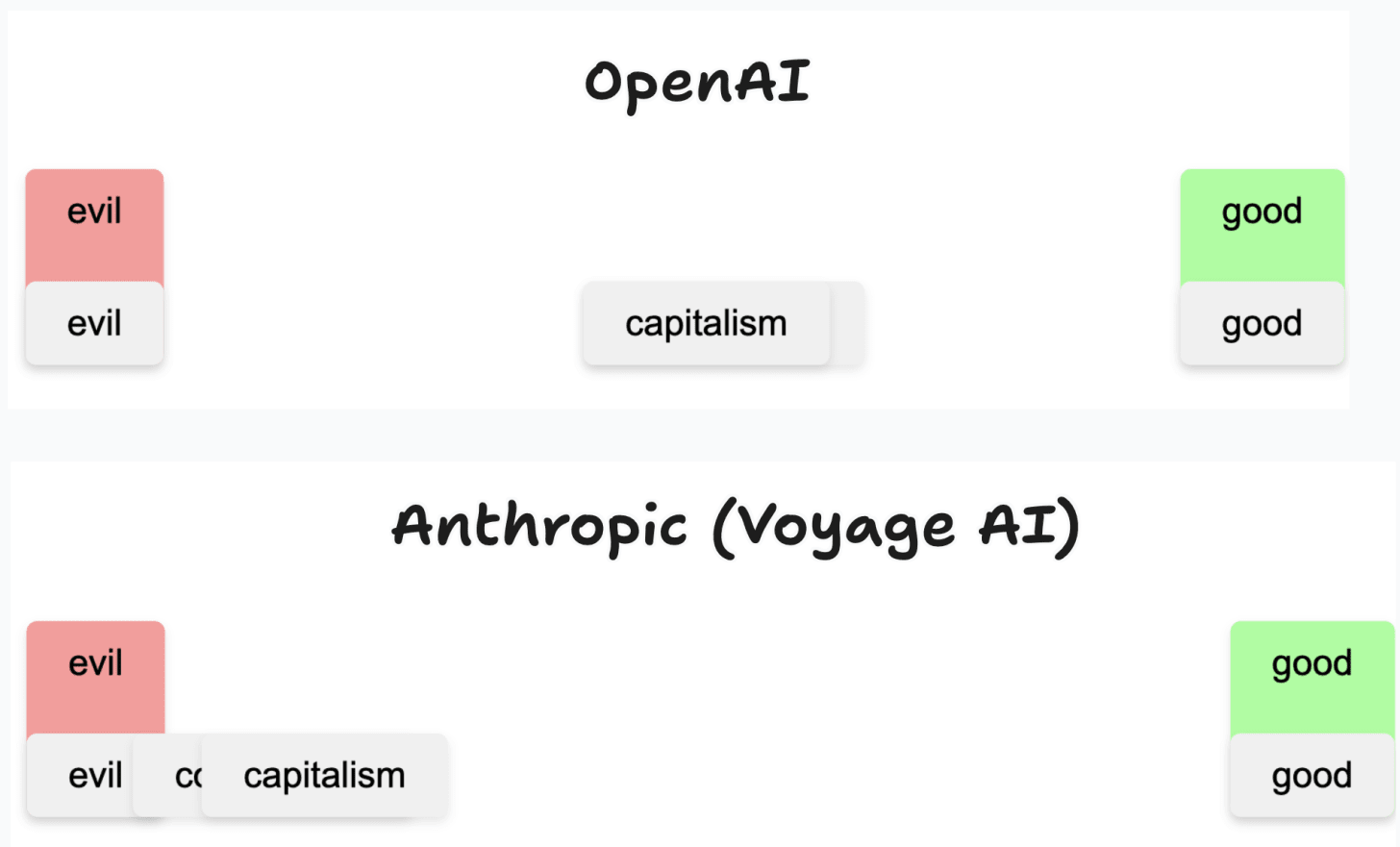

So where does the LLM put them, given that its training data includes all of these groups? With OpenAI’s embedding model, both fall in the middle, with “communism” slightly closer to good. With Anthropic’s model, both fall very close to evil, but “capitalism” comes out better.

The way I interpret this: a lot of people see “capitalism” as positive and a lot see it as negative. Each subculture is “pulling” on these concepts, and the result is a stalemate in this “idea space” tug of war.

What if everyone played this game?

In “The Human Memome Project” I described an example of a game designed to collect data & share it openly as it spreads. In doing so, it simultaneously reveals AND changes people’s perception.

This is another example. Playing this game answers the question: does your worldview match the dominant one (as captured by the LLM)? If it does NOT, then you’re contributing to mapping it, and finding “the others” who share your lens.

If you’re part of the “rationalist” egregore, or the “right wing” egregore, you should be able to accurately predict how its members would rank a series of concepts on these axes. In some ways this is a measure of (1) how unified an egregore is, and (2) how self-aware it is.
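One way to put a number on "how accurately can you predict the ranking" is a rank correlation between your guess and the ranking you are trying to predict. The sketch below uses Spearman's rank correlation for orderings without ties. This is my assumption about a reasonable scoring rule, not something the game currently does, and the concept lists in the usage section are invented placeholders, not real results.

```python
def spearman_agreement(predicted_order, actual_order):
    """Spearman rank correlation between two orderings of the same items.

    Both arguments list the same distinct items, ordered from one pole to
    the other. Returns 1.0 for identical orderings and -1.0 for exactly
    reversed ones. Ties are not supported in this simple version.
    """
    n = len(predicted_order)
    if n < 2:
        raise ValueError("need at least two items to compare rankings")
    if len(set(predicted_order)) != n or len(actual_order) != n:
        raise ValueError("orderings must contain the same distinct items")
    if set(predicted_order) != set(actual_order):
        raise ValueError("orderings must contain the same distinct items")

    # Position of each item in the actual ordering.
    actual_rank = {item: i for i, item in enumerate(actual_order)}

    # Sum of squared rank differences between the two orderings.
    d_squared = sum(
        (i - actual_rank[item]) ** 2 for i, item in enumerate(predicted_order)
    )
    return 1 - 6 * d_squared / (n * (n ** 2 - 1))


if __name__ == "__main__":
    # Hypothetical orderings, invented for illustration only.
    my_guess = ["concept_a", "concept_b", "concept_c", "concept_d"]
    group_answer = ["concept_a", "concept_c", "concept_b", "concept_d"]
    print(spearman_agreement(my_guess, group_answer))
```

Averaging this score across members of a group who are all predicting their own group's ranking would give a rough proxy for (1) and (2): high scores suggest the group is both unified and self-aware, while low scores leave the two hard to tell apart.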

I think no two humans share exactly the same map of word associations, but those with enough overlap form an egregore, a shared language. And we can measure this. We can see these things evolving, and we can see our own influence on them.

I think this will also contribute towards a “translation layer”. As far as I can tell, the majority of arguments happen between people who use different language maps without realizing it, each assuming the other person is stupid or evil.

If you’re in NYC, Adam is giving a talk at WordHack July 17th: https://www.wonderville.nyc/events/wordhack-7-24-2025

Just like the other examples in the Human Memome Project, this game still provides a lot of value even if very few people play it. I can make a map of what I THINK the rationalist egregore thinks. If everyone around me agrees, I publish it. If they see it and think it’s completely wrong, they will correct it. Or they may decide they don’t want to reveal information about themselves to me, in which case they still gain information in the other direction: they see my perception of them.

I use the word “egregore” to describe a “collective mind”.

Beautiful.

It's an Ideological Turing Test you can actually take, but the rub is in how you would actually "score" people's answers.