Update: I made a 20 min video walking through this pitch, including how a version of this semantic engine does already exist today, and how these semantic embeddings work to navigate “idea space”.

I’ve been thinking a lot about a new category of organization that I call the “Open Research Institute”. I’m writing this to find the collaborators that can help me build it. Or if someone is already building it: consider this my application to join.

In a nutshell, ORI is:

A “meta organization”, which means a (1) network of people & (2) a cultural pattern, more than a specific corporation or piece of software

You “apply” by putting your work anywhere on the internet with a timestamp

A semantic engine maps your ideas in concept space

A useful contribution, or prediction, is itself “proof of work”. You are rewarded upon delivery of value, with either status or money

I think ORI already exists in a way; it’s something I stumbled on more than invented. My ability to navigate it is how I got started as an independent researcher, apprenticed under some of the smartest minds on earth, made useful contributions, and got recognized & awarded with status, which I then converted to money.

To demonstrate the potential of ORI, let’s walk through an example.

How to launch a new scientific paradigm

Say you’re a grad student at a university, studying computational psychology. You have big, bold, brilliant ideas about consciousness that don’t fit the existing paradigm you’re working in. What do you do?

You start writing about your work on the internet, on Substack, twitter, or your personal blog. Wherever you write, you index it in a semantic engine like ORI’s, and it automatically starts to consume what you write.

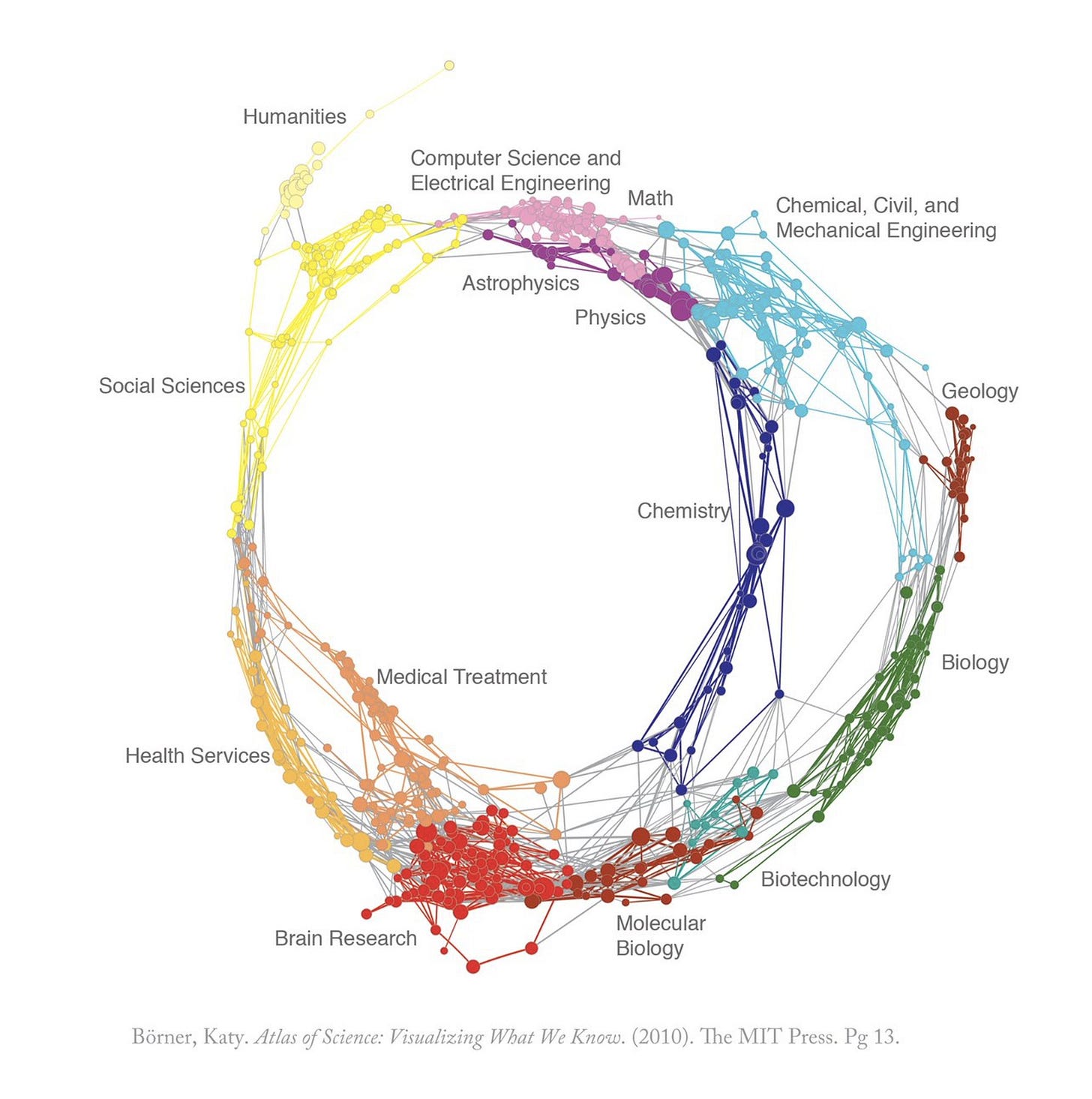

This gives us an evolving map of concept space as shown below. Each document (tweet thread, or blog post) is a dot on the map. The color here corresponds to the author.

In this story, you are the orange author 🟠. A lot of your writing falls into the center cluster; that’s where people writing about consciousness find each other.

As you develop your novel theories, you find yourself straying, forming a new “island in conceptual space”. If your ideas are interesting, they start to attract others who want to contribute. Once you get a critical mass of people, you organize meetups, start doing experiments & gathering data. Any early success in predictive power attracts more people & funding. Eventually you start making predictions that the mainstream paradigm fails to make. You establish a new research institute to further develop this new science you’ve pioneered, maybe you call it something like the Qualia Research Institute.

The story above is fictional, because this semantic engine I describe doesn’t yet exist. But what unfolded in this story is true, as far as I understand how Andrés Gómez-Emilsson founded QRI. He found something new that didn’t fit, found the others, and it paid off.

I see a similar thing happening right now in the newly emerging paradigm of memetics, where I tried to simulate the semantic engine by writing this “memetics 101” essay and listing all the people I’ve found so far and where their work is relative to each other.

The semantic engine guarantees credit & fosters collaboration

The most important thing about the semantic engine is its ability to “rewind time”. The first people to stumble on something truly novel are always met with criticism, like “this is pseudoscience”, “if this was really groundbreaking, someone else would have noticed it by now” etc. When it DOES deliver, we want to see who called it early, because investors want to fund those people, and smart competent people want to flock to these scenes to contribute to the next big thing.

This is a really important principle in ORI: resources flow towards those who demonstrate effective use of those resources. If you make effective use of capital, you get more capital. The missing thing that I am trying to contribute to ORI here is: if you make effective use of attention, you get more attention.
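The “rewind time” idea can be sketched in a few lines. This is a minimal illustration, not how the real engine works: it assumes every document already has an embedding vector, and the names `Doc`, `who_called_it_early`, and the `threshold` value are all hypothetical. Given the vector of an idea that later paid off, it returns each author who wrote something close to it, ordered by their earliest timestamp.

```python
from dataclasses import dataclass
from datetime import datetime
from math import sqrt


@dataclass
class Doc:
    author: str
    published: datetime
    vector: list[float]  # hypothetical precomputed embedding


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def who_called_it_early(docs, idea_vector, threshold=0.8):
    """Return (author, first_timestamp) pairs, earliest first.

    Only documents whose similarity to the idea meets the
    (arbitrary, illustrative) threshold are counted.
    """
    first_seen = {}
    for doc in docs:
        if cosine(doc.vector, idea_vector) >= threshold:
            previous = first_seen.get(doc.author)
            if previous is None or doc.published < previous:
                first_seen[doc.author] = doc.published
    return sorted(first_seen.items(), key=lambda pair: pair[1])


# Toy data: alice wrote near the idea in 2021, bob in 2023, carol never did.
docs = [
    Doc("alice", datetime(2021, 3, 1), [0.9, 0.1, 0.0]),
    Doc("bob", datetime(2023, 6, 1), [1.0, 0.0, 0.0]),
    Doc("carol", datetime(2020, 1, 1), [0.0, 1.0, 0.0]),
    Doc("alice", datetime(2019, 5, 1), [0.1, 0.9, 0.1]),
]
idea = [1.0, 0.0, 0.0]
early_callers = who_called_it_early(docs, idea)
```

Here alice comes out ahead of bob because her matching post is older. Her 2019 post is ignored because it is not close to the idea, which is the point: credit goes to whoever wrote about this specific thing first, not whoever has been writing the longest.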

I prototyped this using prediction markets. I made a market that said “does this author have a genuinely novel grand unifying theory?”, because I was confident what he had was going to be big news once it spread, and I wanted to call it early. What happened was:

a lot of people reviewed it thoroughly, as a result of me making this market

most decided it was NOT groundbreaking

very quickly the market was 95% “no”, so others stopped looking/reviewing

this failure was reflected in my prediction score on Manifold

Consider if I had found something genuinely novel, and everyone who read it was like, “wait this really IS going to change everything”. The odds on the market would reflect that. A market that says “this is a groundbreaking new theory” where the odds aren’t “99% no” would very quickly attract more attention, and more reviewers. If it survives that attention, it would continue to spread.

I already have another candidate I want to make a prediction market for (blowtorch theory). Say my prediction market is “Will Scott Alexander endorse this theory once he sees it?”. Making the market acts as an initial filter. If it’s bullshit, Scott doesn’t need to bother, the community will unanimously vote on that. If it does reach the notable person, and he does endorse it, that awards me with status. Next time I say, “big important piece of information!” people are likely to listen. And vice versa if it gets reviewed and dismissed.

Good use of attention up the chain of society is rewarded. Bad use of it is punished. This system creates “sustainable marketing pathways”, a kind of information highway.

Being scooped is a feature, not a bug

When you put your brilliant idea publicly on the internet, there’s a chance that you might get scooped. Someone with more resources than you might see it, execute it, and reap the rewards.

Consider flipping that story. What happened there is that you made a really good proposal, it was accepted, implemented, and succeeded. You are a product manager for civilization. If you are good at this game, you can keep doing this, gaining status & recognition every time you get a success. You will have teams of people racing to build what you design to reap the rewards, and even wanting to pay you for early access to your designs.

You are either the best person to execute your vision, or someone else is. Either way, the fastest way for this thing to exist is to broadcast it. You will either get the resources to build it or find those who can. If the outcome of the thing you’re building improves your life, then you win either way.

This is true of ORI itself. I know there’s a lot of money to be made here, and it would be great if I can be the founder and make a bunch of money. But if I cannot execute, I’d rather someone else does. Because then I’ll be a member of ORI, I’ll put my work into it, I’ll make novel contributions, and I will be rewarded for it. That’s what ORI does, and it doesn’t matter to me who runs it.

ORI rewards those who make good use of resources, by giving them more resources. Money is one resource. Status is another. If you attach your reputation to novel breakthroughs and it pays off, you are rewarded with more status, which you can use to keep moving things through the network more efficiently. Peer review doubles as marketing.

Finding universal truth, invariant patterns across fields of knowledge

This is the last thing I’ll mention as a foundational principle of ORI. It’s the most important, but also the most esoteric/at the edge of my knowledge. I’ll describe it here in language that should make sense to those who already see the vision.

This was the tweet that inspired me to start working on ORI: “the answer to every scientific question in your field is in another”:

I strongly believe in “consilience”, in the unity of all scientific fields. That there are patterns that we will find hold true across all of them. That in fact, seeing these fields as separate is the current bottleneck to major breakthroughs.

When you look across many scientific fields, and see the same pattern holding, what you’ve found is a “universal truth”, a metaphysical law of nature. You can take what you learned from studying chemistry, and use it to make predictions on dynamics of sociology. Knowledge of consciousness can give you predictive power over observations in cosmology. Religion studies can inform AI alignment.

This all sounds crazy, but it’s perfectly reasonable if you consider that there are universal laws at a “lower level” of reality. And once you find the ways in which everything connects, you get much simpler theories that have way better predictive power than a lot of complex, incompatible theories.

This is the image I have in my mind when I think of this process of going from complex models & lots of data, to much simpler models AND better prediction:

I think once we find a universal “metaphysical theory of reality”, it will be an enormous, historic breakthrough for humanity, and the process starts over again. To push through towards more accurate predictions, we split off into new scientific fields, new categories, edging the circle of the frontier of knowledge forward, until you see enough pieces of it to unite again. And on & on recursively.

I *think* this is how you bootstrap superintelligence, towards the best possible future: where human minds & hearts (intuition) play the role of the “right brain” in the cognition of a superorganism, and intelligent machines play the role of a “left brain”.

ORI is where the frontier of metaphysics always is, by definition. It can always be found at the highest level of coordination currently possible. I think there’s an entire category of “coordination-gated-knowledge” like this.

What pieces of ORI already exist?

Ok, phew. That’s the big vision. Now let me show what I’ve already got so far and what I think the next steps are.

(1) internet wide semantic search

Exa (https://exa.ai/) already has a product that does this. You can write your blog post / manifesto, throw it into this semantic search that indexes the web, and find the others.

To test this, I took emergent’s research manifesto as the search query, and it found speakerjohnnash’s “purple pill” manifesto. This was exciting because these are two people who are pioneers in memetics, and they didn’t know each other! So this is the system working as expected: (1) finding the pioneers, (2) helping them find each other.

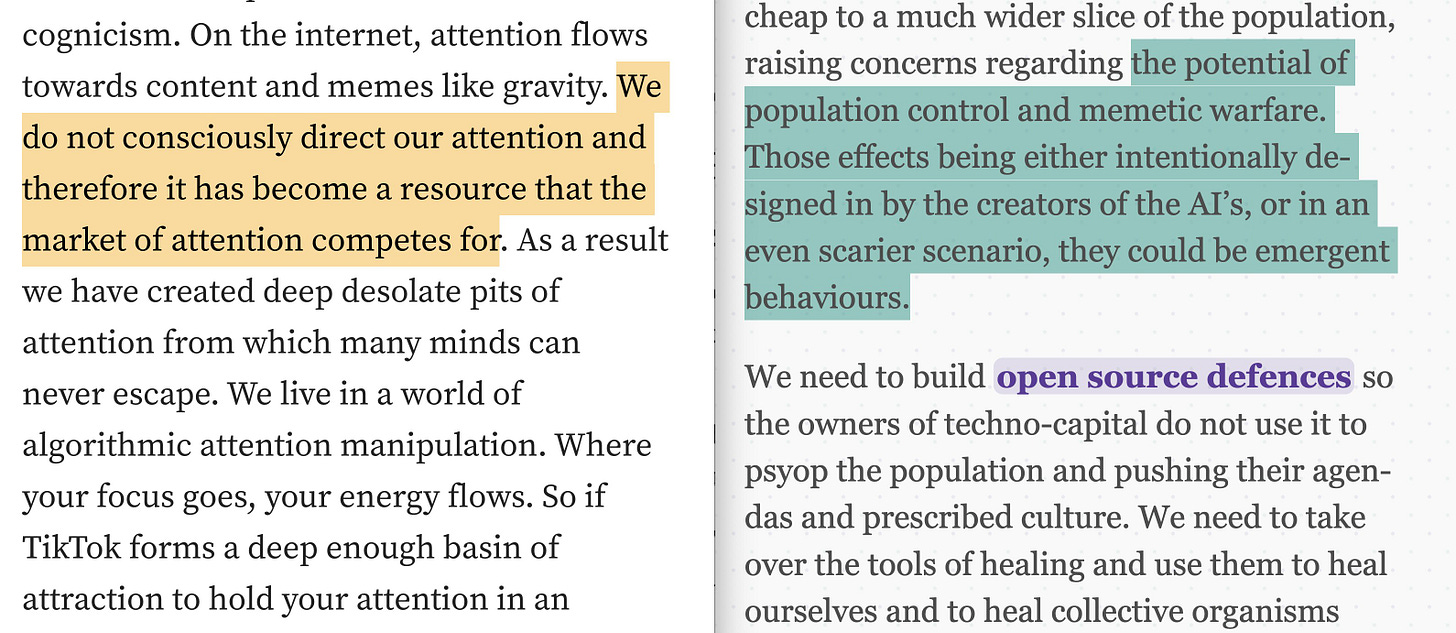

In their manifestos, they both talk about the dangers of “memetic warfare” and “feedback loops of attention basins”:

I want the ORI core product to have a way to do this kind of “internet wide” search, against your body of work, maybe once every 24 hours. So if you write something years ago in your personal blog, but someone on LessWrong just had an epiphany about it yesterday, you’ll find each other.

We also probably want to filter by “who is in your network”. Like maybe you want to constrict the search to only include “people I follow on twitter / substack / bluesky”.
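Here is a rough sketch of what that recurring search with a network filter could look like. It is only an illustration: a real engine would use learned embeddings (such as what Exa provides) rather than the word-count stand-in used here, and `embed`, `find_peers`, and the sample authors are all made up for the example.

```python
import re
from collections import Counter
from math import sqrt


def embed(text):
    """Toy stand-in for a real embedding: lowercase word counts."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def find_peers(my_posts, corpus, following=None, top_k=3):
    """Rank (author, text) pairs in corpus by similarity to my body of work.

    If `following` is given, only authors in that set are considered,
    which is the "who is in your network" filter.
    """
    query = embed(" ".join(my_posts))
    scored = []
    for author, text in corpus:
        if following is not None and author not in following:
            continue
        scored.append((cosine(query, embed(text)), author, text))
    scored.sort(key=lambda item: item[0], reverse=True)
    return scored[:top_k]


my_posts = ["memetic warfare and feedback loops of attention"]
corpus = [
    ("dana", "attention feedback loops drive memetic warfare online"),
    ("eli", "sourdough baking tips for beginners"),
    ("fay", "memetic warfare is a danger"),
]

everyone = find_peers(my_posts, corpus)
only_network = find_peers(my_posts, corpus, following={"eli", "fay"})
```

Running this once every 24 hours against fresh posts is then just a scheduling question. The interesting design choice is that the query is your whole body of work, so something you wrote years ago can still match a post from yesterday.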

(2) Make an MVP out of a network of open research notebooks

I don’t want to build another platform that everyone has to switch to. I want to build ORI on top of existing networks. All we need is a way to ingest standard protocols like RSS feeds, then you can be part of this network even if you primarily post on substack, or bluesky, or mastodon. We can get twitter too through projects that scrape your own tweets and publish them to an open API.
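To make the RSS part concrete, here is a minimal sketch of ingestion using only Python’s standard library. It assumes a plain RSS 2.0 feed; the sample feed, its URLs, and the `parse_rss` helper are invented for illustration, and a real ingester would also need to fetch the feed over the network and handle Atom and malformed feeds.

```python
import xml.etree.ElementTree as ET
from email.utils import parsedate_to_datetime

# Invented sample feed standing in for a Substack / Mastodon / blog RSS feed.
SAMPLE_RSS = """<rss version="2.0">
  <channel>
    <title>Example research notebook</title>
    <item>
      <title>Second note</title>
      <link>https://example.com/second</link>
      <pubDate>Tue, 07 Jan 2025 09:30:00 GMT</pubDate>
      <description>More on attention basins.</description>
    </item>
    <item>
      <title>First note</title>
      <link>https://example.com/first</link>
      <pubDate>Mon, 06 Jan 2025 10:00:00 GMT</pubDate>
      <description>Notes on feedback loops.</description>
    </item>
  </channel>
</rss>"""


def parse_rss(xml_text):
    """Turn an RSS 2.0 document into timestamped entries, oldest first."""
    root = ET.fromstring(xml_text)
    entries = []
    for item in root.iter("item"):
        entries.append({
            "title": item.findtext("title", default=""),
            "link": item.findtext("link", default=""),
            "text": item.findtext("description", default=""),
            # RSS dates use the RFC 822 format, which email.utils can parse.
            "published": parsedate_to_datetime(item.findtext("pubDate")),
        })
    entries.sort(key=lambda entry: entry["published"])
    return entries


entries = parse_rss(SAMPLE_RSS)
```

Each entry ends up with text plus a timestamp, which is all the semantic engine needs from a source, no matter which platform the writing lives on.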

If people don’t have a preference, I like the idea of telling people to make an open research notebook using Obsidian, self hosted on GitHub pages (I wrote a tutorial on that here, no coding required).

Imagine writing esoteric notes at the frontier of your knowledge in your little notebook, and getting an email from a fund like Analogue saying “your insights helped us make important breakthroughs, we’d like to fund you to keep going, no strings attached” (this is essentially what happened to me, tweeting about memetics).

(3) Who else is working on this

Cosmik (Ronen Tamari) is creating a social media platform for research that will be a “meta network” (consuming content from Bluesky, twitter, etc).

Cosmik's semantic posts act as knowledge legos, allowing researchers to express ideas in structured, machine-readable formats. Our AI assistant seamlessly converts regular posts into semantic posts, powering Hyperfeed—a next-generation research discovery system

Ronen’s bio mentions “Nanopub” which appears to be a way of defining a standard for citing a “unit of information”. This is something we still have to solve in ORI, because semantic similarity is not enough.

See also, HexaField on twitter is “mapping out this ecosystem”, says there are more that he’s trying to keep track of.

Discourse Graphs (Joel Chan, https://x.com/JoelChan86)

Discourse Graphs are a decentralized knowledge exchange protocol designed to be implemented and owned by researchers — rather than publishers — to share results at all stages of the scientific process.

Discourse Graphs are client-agnostic with decentralized push-pull storage and can be implemented in any networked notebook software (Roam, Notion, Obsidian, etc.), allowing researchers to collaborate widely while using the tool of their choice.

I am most excited about this because they already have a few academic labs using this, and it fits my vision of not requiring signing up to another platform, you bring the network to where people are.

Mycelial Institute (by mykola, vixamechana, & others)

No online presence yet. They embody the “network of people” + the “cultural pattern”. It’s a mix of people across academia / industry / many different fields who form a collective. Talking with Myk was very exciting because he independently deduced my entire vision of ORI. It’s called “mycelial institute” because the network grows decentralized across existing institutions & corporations, and all who wish to collaborate in good faith are welcome. Those who collaborate continue to grow & thrive & outcompete closed networks.

Tianmu (https://tianmu.org/)

Similar to Mycelial Institute in that they started with the network of people & the cultural pattern. They are very smart people and are at the frontier of metaphysics as far as I can tell. See: “The Mathematical Cosmology of Tianmu”.

Note: when I say someone is “at the frontier”, I can only discern that they have surpassed me. I have no ability to judge beyond what I can recognize. What I can do is follow those people, and see how they respond to each other & integrate their insights to accelerate my own model of reality.

(4) What I’m working on now

Volky has been working on the semantic engine here: https://github.com/Open-Research-Institute/semantic-engine. The core infrastructure we’re trying to build is (1) an engine that can take RSS feeds & arbitrary text, and (2) an infinite canvas viewer and a timeline view (to see the evolution of an idea in a given corpus). This will give us a base prototype that you can throw stuff into. This should allow us to take a community of people writing on Substack + twitter + Obsidian and demonstrate immediate feedback loops of “finding your peers” and connecting as you write.

For example, here’s someone I found today who has an essay on AI alignment that I think articulates a novel theory; the closest match I know of is Emmett Shear’s new alignment lab. This person doesn’t want fame as much as to find someone who understands, for feedback or validation.

I want to experiment with deriving data out of the free form writing. I tweeted the other day that a lot of smart people seem to be reaching a very specific conclusion, that “consciousness is a fundamental building block of reality” (as opposed to consciousness being generated by a brain).

Now what I want here is to see how widespread this belief is amongst the substacks I read, podcasts I follow, books on my reading list. Nathan Young demo’d this tool a while back for taking a specific question in AI policy/safety debate, and visualizing the spread of opinions on it. I want to do that but dynamically, for any question. And then to dig further (like, for all the people who take consciousness as fundamental, do they address <insert materialist counter-claim here>).

Once we have a prototype that can do this, I think it will start to create feedback loops that change the way people write, and this will validate if we’re on the right track.

I think you can let people write in free form and use semantic transformations on top. Like, instead of having people write in a specific format to clarify their assertions, I can just take someone’s substack/body of work and say “how does he define consciousness?” / “do they place consciousness as a fundamental property of reality?” and sort by that. It doesn’t need to be perfect.

Consilience is science, not consensus which is political.

The term consilience is new to me, but I'm also a believer. I've been exploring the concept through the lens of systems science for the past couple of years, and am now taking a break from writing to flesh out my plans for a research org focused on advancing systems research.

Very inspired by your approach to building an Open Research Institute, I'll definitely be incorporating some of your ideas and research methods!

https://systemexplorers.substack.com/p/what-is-systems-science