Discernment is the bottleneck

It sounds like you’re saying the truth is: sometimes scientific institutions are wrong, and the anti-science cranks are right.

But you’re also saying that we might NOT want to spread this truth, because it will “break” people’s world models, which might make things worse?

This seems like a contradiction. Why protect a false world model?

The relevant essays are “Our Story So Far” and “How Hank Green is Contributing to the Memome Project”.

My answer is: I want to evolve each world model towards the truth, INSTEAD of demolishing it & replacing it with a better one.

Why? Because multiple competing world models evolving towards truth have fewer blind spots than a single homogeneous one.

Let me explain what I mean when I say it’s “dangerous” to break people’s world models, even if you are well intentioned and trying to give them the truth.

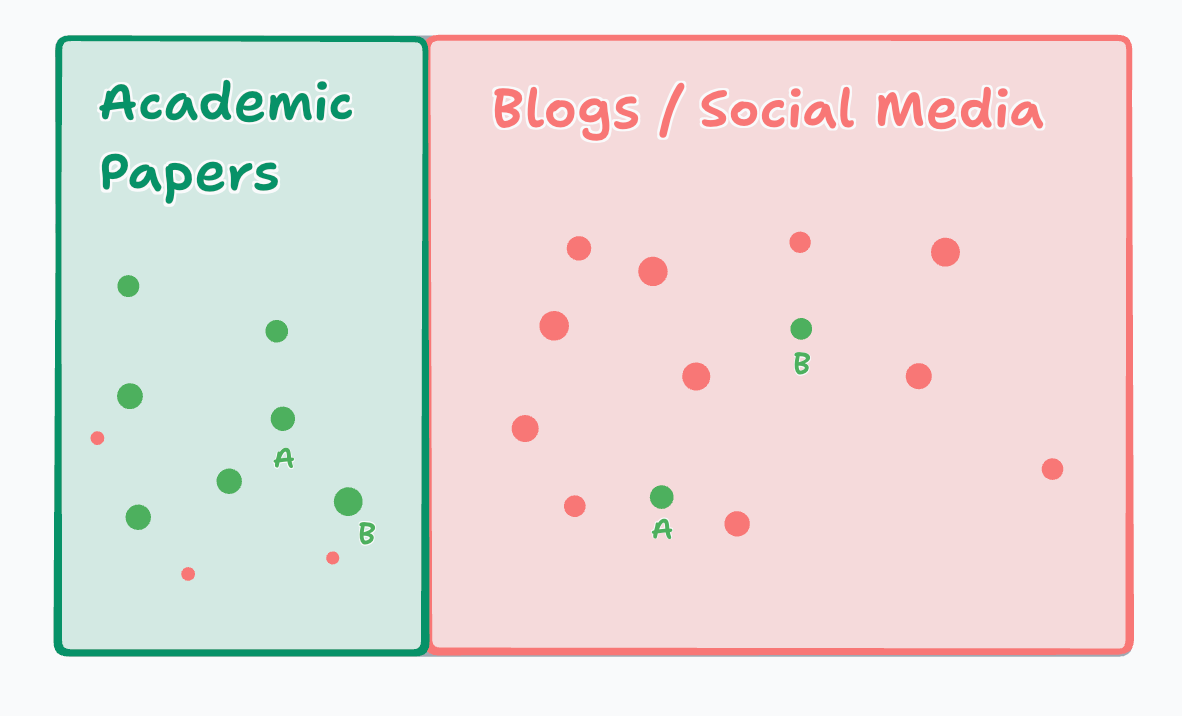

Here’s what the worldview looks like for someone who fully trusts scientific institutions. Each dot is a piece of information. 🟢 Green = good & true, 🔴 red = false / fraud.

They judge whether something is good & true by where it comes from: which part of the diagram it lands in. They also know that some “good & true” things do exist on the right side, but if those things really are good & true, they will eventually be found on the left side too. Therefore, there is NO reason to ever look at the right side for truth¹.

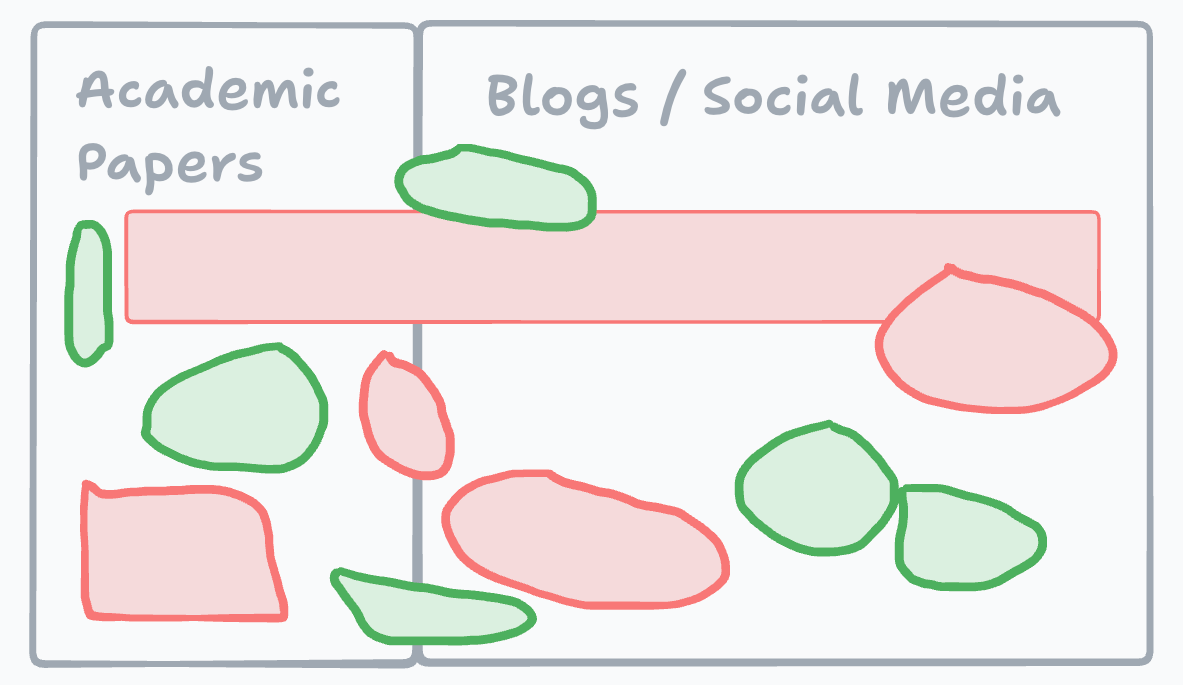

Now, my worldview splits it more like this:

This is what I meant by:

By 2025, some blog posts had far greater rigor, integrity, and predictive power than some academic papers. But no one could easily tell these apart, and most overcorrected in one direction or another.

From: “Our Story So Far”

The idea that “some blog posts are true, and some academic papers are false” is not a difficult or controversial statement. The actual hard question is: which ones?

We can now see what happens inside the mind of someone whose worldview you are trying to update. When you go & valiantly point out to them how much fraud or incompetence there is in science, this is what happens:

IF you manage to succeed, they DON’T update to the more nuanced “it’s complicated” worldview. All you’ve done is show them that the one safe corner on the map is NOT safe. The whole thing appears red, and untrustworthy².

This is a very bleak worldview to live with. It’s also NOT true. Thank god that humans have a natural immune response against updating their worldview in the face of such misguided attempts³.
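To make the “which ones?” problem concrete, here is a toy sketch, assuming a hypothetical set of eight claims whose mix of true and false items is invented purely for illustration (it is not data). It compares the fully trusting heuristic (believe a claim if and only if it comes from the institutional, left side of the diagram) with the heuristic someone is left holding once the safe corner has been “broken” (distrust everything). The names `Claim`, `CLAIMS`, `trust_by_source`, and `distrust_everything` are made up for this example.

```python
from dataclasses import dataclass


@dataclass
class Claim:
    source: str    # "institution" (left side of the diagram) or "outside" (right side)
    is_true: bool  # ground truth, which nobody actually gets to see in real life


# Hypothetical claims; the proportions are invented for illustration only.
CLAIMS = [
    Claim("institution", True),
    Claim("institution", True),
    Claim("institution", True),
    Claim("institution", False),  # fraud or incompetence inside the "safe" corner
    Claim("outside", True),       # e.g. a rigorous blog post the institutions ignore
    Claim("outside", False),
    Claim("outside", False),
    Claim("outside", False),
]


def trust_by_source(claim: Claim) -> bool:
    """The fully trusting worldview: believe it iff it lands on the left side."""
    return claim.source == "institution"


def distrust_everything(claim: Claim) -> bool:
    """The worldview left over once the safe corner is shown to be unsafe."""
    return False


def accuracy(judge, claims) -> float:
    """Fraction of claims where the judge's verdict matches the ground truth."""
    return sum(judge(c) == c.is_true for c in claims) / len(claims)


print("trust by source:", accuracy(trust_by_source, CLAIMS))          # 0.75
print("distrust everything:", accuracy(distrust_everything, CLAIMS))  # 0.5
```

Under these made-up numbers, the source heuristic is imperfect but still beats blanket distrust. Doing better than either requires judging claims individually, which is the discernment the title refers to.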

If you understood all the pieces above, I expect you to be feeling very good right now about humanity’s potential to find truth (if that’s not the case, leave a comment!)

When someone resists the truth, it’s often because the way we’re delivering it would leave their worldview worse, not better. That resistance is a feature, not a bug. Understanding its source will allow you to spread truth much more easily⁴.

If you’re speaking to someone inside your worldview, your goal is to increase the discernment of your shared worldview.

If you’re speaking to someone across worldviews, your goal is either (1) to convert them, or (2) to extract information from their worldview that you can use to improve the discernment of your own (or vice versa)⁵.

Ultimately, the most accurate picture of the truth, and the best predictions, will come from aggregating all the worldviews. In machine learning we call this “ensemble learning”.
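As a toy illustration of why an ensemble helps, here is a minimal majority-vote sketch, assuming three hypothetical world models that each misjudge two of six made-up claims, with blind spots that do not overlap. The model names and claims are invented for the example. It also shows the homogeneous case: three copies of the same model always vote together, so the vote can’t fix their shared blind spots.

```python
from collections import Counter

# Hypothetical ground truth for six claims: True = good & true, False = false/fraud.
TRUTH = [True, False, True, True, False, True]


def make_model(blind_spots):
    """A world model that gets every claim right except the ones in its blind spots."""
    return [not t if i in blind_spots else t for i, t in enumerate(TRUTH)]


# Three hypothetical worldviews whose blind spots don't overlap.
MODELS = {
    "institutional": make_model({1, 2}),
    "contrarian": make_model({0, 5}),
    "independent": make_model({3, 4}),
}


def accuracy(predictions):
    """Fraction of claims judged correctly."""
    return sum(p == t for p, t in zip(predictions, TRUTH)) / len(TRUTH)


def majority_vote(models):
    """Per-claim majority vote across models (an odd number of voters avoids ties)."""
    return [Counter(votes).most_common(1)[0][0] for votes in zip(*models)]


for name, preds in MODELS.items():
    print(name, round(accuracy(preds), 2))  # each model: 0.67

print("diverse ensemble", accuracy(majority_vote(MODELS.values())))  # 1.0

# A homogeneous "ensemble": one worldview repeated three times shares its blind spots.
homogeneous = [MODELS["institutional"]] * 3
print("homogeneous ensemble", round(accuracy(majority_vote(homogeneous)), 2))  # 0.67
```

The diverse ensemble only corrects errors because the blind spots are spread across different models; if all three shared the same blind spot, the vote would simply reproduce it.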

¹ They likely also acknowledge that some things on the left side are NOT true, but there is nothing to be done about that. This way of slicing is the best they’ve got.

² The same thing happens when a left-wing person is exposed to evidence that left-wing ideology has done many evil things. If they accept it, it doesn’t mean “the good people are actually on the other side”; it means “it seems that EVERYONE is evil, and there is no good to be found anywhere”, which is a worse position to be in (and not true).

³ I recently saw a great write-up on this called “Why Facts Don’t Change Minds in the Culture Wars”, which describes exactly this process of a worldview crumbling, and treats our natural immune response to it as a feature, not a bug:

“When you challenge a core idea, you’re not just questioning a single point; you’re tugging at the beams and arches of the entire cathedral”

⁴ See the “Push vs Pull” principle of open memetics.

⁵ This is what I mean when I say “culture war turns into culture science”.

People who study urban legends point out that even when the facts are false, the legend expresses a fundamental fear (or sometimes a hope).

This points to another fundamental truth: the main purpose of language and communication is to express emotion; facts are secondary. I learned this from growing up with dogs. Dogs have a rich language. It is as much a body language of posture as it is one of growls, barks and howls. But it mostly consists of expressing what they are feeling.

I was sitting in the passenger seat of the car when I was 11, with our West Highland White Terrier, Jock, sleeping at my feet. I knelt down to pat him. He didn't want to be patted. He bit my wrist (just two canine teeth through the flesh), glared at me, then closed his eyes again. I got the message.

Dad didn't see it that way. He stopped the car, gave Jock a good thrashing, staunched the blood, then drove to the doctor for a rabies shot. Sheesh! Can't a dog just be left to have a nap?

Jock left our house soon after. He hated human children, and I was the only one he could tolerate. I still have the scar, though.

To get back to the main point: if you say something that challenges a person's fundamental model, the emotional subtext is that they are sleeping through life, wrapped in illusions of safety. The result is that they are liable to bite.

I'm guessing this is why agreeing with someone on, say, the minimum wage and then asking about its consequences works better than pointing out facts about those consequences.

"Okay, let's do it. Why not $100/hr?"