Geoffrey Hinton on developing your own framework for understanding reality

Bad news: nobody can teach you this. Good news: you can do it on your own! It is, in fact, the only way. This is the story of how I learned to think for myself.

I love this clip of Geoffrey Hinton describing people who have “good intuition”. I think what he’s talking about is less about intuition and more about “people who have good epistemics”. People who have faith in their ability to find truth.

I think it’s very similar to developing your own taste. People with refined/discerning taste don’t need someone else to tell them whether a piece of art is good or not. They can tell for themselves whether listening to a piece of music makes them feel something. They can see the answer for themselves.

I think Hinton is describing the same thing, but for the process of truth finding.

(here’s the clip, with full text below)

I think it's partly they don't stand for nonsense.

Here's a way to get bad intuitions: believe everything you're told. That's fatal.

Some people have a whole framework for understanding reality and when someone tells them something, they try and sort of figure out how that fits into their framework. If it doesn't, they just reject it. That's a very good strategy. On the other hand, people who try and incorporate whatever they're told end up with a framework that's sort of very fuzzy and sort of can believe everything and that's useless.

So I think having a strong view of the world and trying to manipulate incoming facts to fit in with your view, obviously it can lead you into deep religious belief and fatal flaws and so on. Like my belief in Boltzmann machines. But I think that's the way to go.

If you've got good intuitions you should trust them. If you've got bad intuitions, it doesn't matter what you do, so you might as well trust them!

(from this interview, at 42:00)

This is so important to me because (1) I made it to 30 years old, and I never had this. His description 100% applied to me about “people who believe everything [or don’t know what to believe], and their understanding of the world is fuzzy and useless” (2) This changed over the last ~6 months.

I’m going to spend the rest of this post reflecting on what I think led to this (with a final section on “the dangers of thinking for yourself” that can be read on its own).

It’s an incredible shift that has really completely changed how I see & interact with the world. It felt almost kind of sudden and you can see me confused & grappling with this in my tweets. Here’s one (incorrect) theory I had in March about the cause:

The change was much bigger than understanding people. What I now had was a way to test new facts about the world before I let them alter my beliefs.

Here is a concrete way to think about it: imagine a beginner writing code. They write code, and sometimes it works, and sometimes it does not. They can’t quite tell why the same piece of code sometimes works and sometimes doesn’t (for example, because they copied the snippet into the wrong scope). The expert tells them the right answer, and they follow it, even if it doesn’t make sense to them, and they see the expert was right. They learn to trust others’ judgement over their own.

This is not good. The concrete proof this person now has is: even if you think something is incorrect, you should do it anyway, as long as someone who looks smarter says it. They learn that this is a practical, successful strategy for problem solving.

I wasn’t like that when writing code, but I was 100% this with everything outside of code (politics, history, management, religion, society, and people).

I had very good epistemics when it came to engineering. I didn’t need anyone to tell me if my code was right or not, I could just test it. I trusted no one above the compiler. My epiphany was seeing how this same process could apply to all aspects of life.

How I found my framework for truth

I started trying to predict things, and paid attention to feedback.

I think this is pretty much it. Try to predict and get feedback from reality. I think doing this over and over leads to a few things:

It forces me to create a mental model

(even if I only have one data point, it forces me to extrapolate a line. Even if I have NO data, it forces me to articulate my priors. So, I can ALWAYS come up with a model)

I practice updating my model

(the continuous feedback is critical. It becomes kind of a game: every time I make an incorrect prediction, it feels like progress, not a failure. It’s a clue about what a better model’s output should look like)

I develop an internal source of discernment

(just like riding a bike, or any process that comes with immediate feedback, I can tell for myself if I’m doing well. It’s simply whether my predictions are getting better or not. This allows me to be totally open to advice/models from experts, but I assign my own level of confidence to it based on its predictive power in my everyday life)
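The predict-then-check loop described above can be sketched in code. This is only an illustration: the names (`PredictionLog`, `record`, `brier_score`, `improving`) are hypothetical, and the Brier score is one standard way to score probabilistic predictions, assumed here rather than taken from this post.

```python
from dataclasses import dataclass, field


def _brier(entries):
    # Mean squared gap between stated confidence and what actually happened.
    # 0.0 is a perfect score; always saying "50%" scores 0.25.
    return sum((conf - float(hit)) ** 2 for conf, hit in entries) / len(entries)


@dataclass
class PredictionLog:
    """Hypothetical log of (confidence, outcome) pairs."""
    entries: list = field(default_factory=list)

    def record(self, confidence: float, came_true: bool) -> None:
        # confidence: stated probability (0.0 to 1.0) that the prediction holds
        if not 0.0 <= confidence <= 1.0:
            raise ValueError("confidence must be between 0 and 1")
        self.entries.append((confidence, came_true))

    def brier_score(self) -> float:
        if not self.entries:
            raise ValueError("no predictions recorded yet")
        return _brier(self.entries)

    def improving(self) -> bool:
        # Compare the later half of the log against the earlier half.
        half = len(self.entries) // 2
        if half == 0:
            raise ValueError("need at least two predictions to compare")
        return _brier(self.entries[half:]) < _brier(self.entries[:half])


log = PredictionLog()
log.record(0.9, False)  # confident and wrong: a clue the model needs updating
log.record(0.9, False)
log.record(0.7, True)   # later predictions, made after updating the model
log.record(0.8, True)
print(log.brier_score(), log.improving())
```

The point of the sketch is the last method: the only question being asked is whether recent predictions score better than earlier ones, which is the "internal source of discernment" in miniature.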

part I

I started doing this with politics, with an anonymous Twitter account. The model I had was that “there are so many good things we can do to improve the world, but we aren’t doing it because [the other political party sucks and hates women and poor people]”. I needed to have space to ask a lot of extremely dumb questions, and for people to take it seriously.

My source of feedback was whether I could predict people’s responses to criticism of their ideology (in other words: do I understand the position well enough to successfully argue it). I’d find random twitter arguments and go deep into the comments. I focused on smaller accounts, ones that seemed to be tweeting earnestly and not for an audience or to sell something.

It was useful to predict adjacent things like: when someone would stop engaging and say “you’re clearly arguing in bad faith/you suck” (like: what do they consider bad faith). I’d then go in and try to repeat back one side’s argument: “are you saying so & so, and the other guy is wrong because he’s not considering xyz?”

If they said, “yeah, you get it!! not like that other guy” that was a win.

part II

I didn’t know that “prediction/model building” is what I was doing at the time. It took me by surprise that I could generate brand new insights when I listened to the news. I’d hear an argument on the radio and I’d think, “wait, that’s actually NOT what the other side is arguing! huh! I know how they would respond to this!” and I’d go on Twitter and I’d confirm I was right!

(this worked the other way too, where I would see people arguing vehemently on Twitter, but there is a very clear answer or point that I heard on the radio that no one was bringing up, and when I brought it up, people actually changed their minds, or at least, admitted they genuinely didn’t realize that, and that made sense to them)

There were a few weeks where I was feverishly writing down every new insight I got like this. It was so exciting because, I’d basically never had an original thought before when it came to politics, never deduced anything on my own from first principles and felt confident about it. It took me a while to realize that I don’t need to write down every insight, because I now had a model that could generate them.

(it’s like if I spent 20 years not being able to tell what piece of JS code would throw an error, and now suddenly I could, and I started making a collection of invalid code, only to realize, the insight is not in the collection, it’s in my ability to deduce)

I think the real 🤯 moment came when I realized that you could say I was “conservative/right-wing” at my dayjob. I kept losing arguments like this:

😬 Me: “let’s not implement that proposal, it’s too complex, we won’t be able to maintain that”

🤔 team lead: “why are you anti making things better? wouldn’t it be nice to automate this & that and be able to ship all these beautiful features?”

This was exactly the same pattern I kept seeing in politics over and over again. The conservative position often isn’t “I don’t want [good thing]”, it’s “I am skeptical [good thing] will actually happen, and there’s a risk we will make things worse”.

I am sure this is extremely obvious to anyone who knows anything about politics. But (1) it wasn’t obvious to me, and (2) I figured this out myself, and it was validating when I saw others had independently reached the same conclusion.

Now I was hooked. If I could figure this out on my own, what else could I discover?

part III

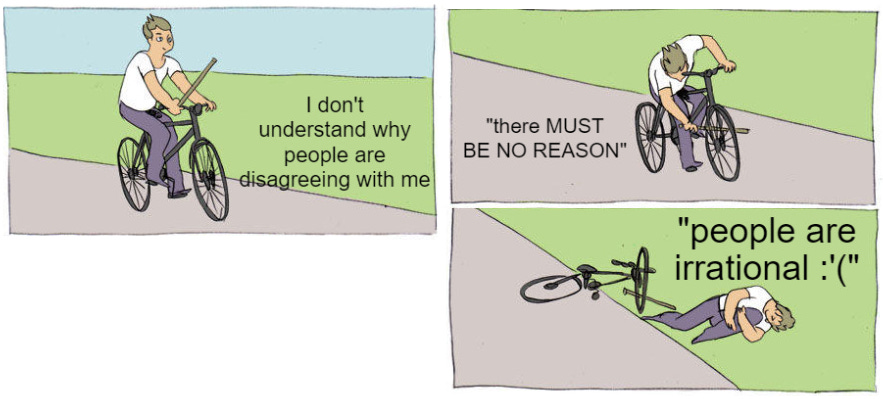

After politics, I applied this to people. I used to have this view: if I am saying something that is clearly true and well supported, but they are still disagreeing with me, they must be dumb/irrational.

This was basically me:

I replaced it with: can I predict what their reaction will be to what I say? why would a reasonable person disagree with my position? if I am so smart, and they are so dumb, why can’t I use my intellect to understand what they’re stuck on and convince them?

People were my new puzzle. Every time someone said something that I thought was crazy/irrational, I got excited: it was a new clue about a world view I can’t (yet) predict!

I started to realize that there was ALWAYS a reason why people were acting in ways that were confusing to me. When they did something “confusing”, it was because I had a specific mental model of what their incentives/desires are, and I never updated that model when I got new information.

Around this time I started watching Succession and became obsessed with it. I started seeing the dynamics described in the show everywhere. In politics & society (with money, status, and power), and inside my own organization (with the way the executives talk to employees, with the way my manager’s relationship worked with his manager). It blew my mind and I ran around telling all of my friends: “guys, Succession, it’s bizarre, it’s like a fiction, but it’s true?? it’s all true? it’s everywhere, it explains everything”

It was absolutely the best & most profound TV show I’d ever seen. Then I watched another one (Marvelous Mrs Maisel) and felt the same way. But hold on! That’s suspicious, how did I happen to stumble on the two best TV shows ever, what’s going on here? (it was me who was changing, not TV).

One thing Mrs Maisel gave me was a new frame for how to understand my parents. It was a new predictive model for why they were not supportive of my pursuits, why their love was so suffocating, why they didn’t understand me. As always, I didn’t take this as truth, I took it as a model and tested it. I was able to use it to improve my relationship to my parents. It turns out, they weren’t trying to control me, not really, they were just scared. I could approach them on my own terms, and they were grateful to just be close.

It worked. I confirmed that the TV show, the fiction, had verifiable truth in it.

I couldn’t believe I spent literal decades confused about people’s behaviors, and it only now occurred to me that I could get better at it. I had received SO many clues and data points my entire life, but my model. Never. Updated. I watched TV and film with new eyes now. Every TV show was now a puzzle, the question was: why are the characters doing this? what will they do next? (I would literally pause and try to make this prediction and then keep going).

My enjoyment of film skyrocketed, and I became obsessed with what truths I could extract. For the first time in my life, I had strong opinions about what a character “should” have done. I saw myself and the director on equal footing: both of us are looking out into the world and trying to capture a predictive model of people. The TV show’s model may be accurate in the director’s world, but I lived in a different subset of society/social circles, and I could objectively disagree with the portrayal, or even improve it, for my own context. Objective. Empirical. Nothing wishy-washy about it.

part IV

Over the few months when this was happening, I became obsessed with thinking. Literally just thinking and writing. I tweeted 8000 times over 5 months. It’s because I kept getting more and more of these epiphanies, non-stop.

The best way I can describe this experience is that it felt very similar to when I was first learning to program, and I got to a point where I realized: every piece of software, anything that runs on a computer, from video games, to Microsoft Word, to YouTube & the internet, it could all be boiled down to a sequence of variables & if statements. There was no magic in the system.

This didn’t mean that I knew how to build these systems, just that I could know, given enough time & support. Now I felt the same way…but for everything. I didn’t know the limits of this framework, what couldn’t I figure out?

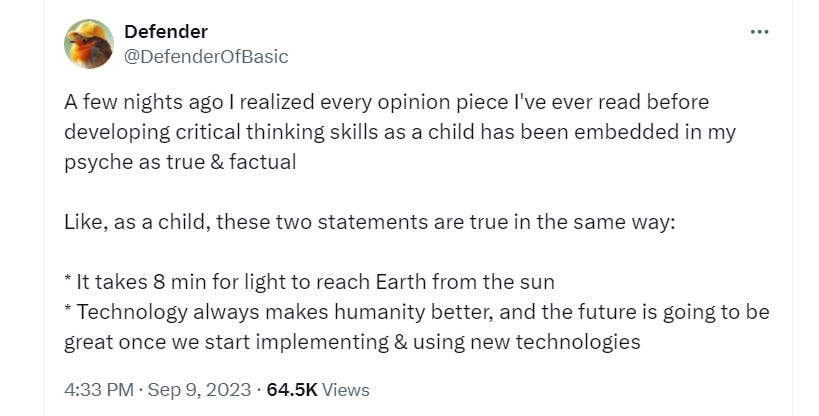

I was re-examining everything that I had read and accepted before developing this robust framework for testing truth. It felt like dismantling an entire building and putting it back together, brick by brick, on new solid ground.

I felt like I had been asleep my entire life and had just woken up.

part V

Even things that were traditionally outside of the realm of the empirical seemed like they could be cleaved by this framework. I started thinking about woo-woo spiritual things and treating them in the exact same way: if it is true, I should be able to make predictions with it. If it cannot make predictions, then I will dismiss it.

I expected to deduce for myself that it was all bullshit (I was slightly worried I’d end up believing something crazy, but I had to trust the process, I couldn’t NOT read it just because others said it was bullshit). Neither this nor that: I ended up finding some useful things, and a lot of not useful things. Successful discernment!

The most useful thing was buddhist practices/meditation. “just sit down in silence” never did anything for me. I stopped trying to follow advice. I just started reading a lot about neuroscience and theories about what consciousness is, and did my own experiments. I noticed something weird: thoughts had a kind of “causal” effect. Certain thoughts triggered concrete changes in my emotions (which then pushed my thoughts in certain directions).

I began to notice ways in which my perception was concretely altered by my emotional state. I saw, for example, how often I misread the tone of a slack message when I re-read it later in a different emotional state. I saw concrete evidence that a lot of the things I thought I was perceiving directly were interpretations (and how non-trivial it was to separate interpretation from reality).

I intentionally avoided reading anything official about buddhism or meditation once I noticed this. I wanted to do this from scratch. I’d tweet about what I was discovering and people would say, “oh yeah, that’s called ABC in buddhism!” and I’d be like, “fuck yeah, look at me deducing spirituality from first principles”

Even the hand-wavy field of “personal advice & self help” seemed within my grasp. The most interesting question for me was, how can so many successful people give conflicting advice, and each swear by it? Is there any point in listening to anybody? My answer is: yes! I just have to understand (1) why did it work for them (2) if I tried it, why did it not work for me? (3) am I stuck in ways they are not, or vice versa?

The advice “make art for yourself!” never worked for me, I ended up just creating things that weren’t very good, and I didn’t enjoy myself. I used to think that this is just the way things are, different advice works for different people, whatchu gonna do. I don’t believe that anymore. I ended up finding the root cause of why I couldn’t create for myself, and now I really enjoy it (basically, I didn’t understand that making art for yourself meant creating for an audience of size 1, not size 0, here’s a tweet thread about it).

I now have this somewhat radical belief that we’re not that different, fundamentally. Even for things that seem completely subjective and “to each their own” (like one person loving instrumental music and one person hating it), there is often a root cause for this difference that we can understand.

The dangers of thinking for yourself

Hinton mentions this briefly, but thinking for yourself is not a 100% positive thing:

So I think having a strong view of the world and trying to manipulate incoming facts to fit in with your view, obviously it can lead you into deep religious belief and fatal flaws and so on

Democracy is another example of something we talk about as a completely positive thing. But if we are being honest, we can think of specific scenarios where thinking for yourself (or democracy) is not a good strategy, where it would lead to a bad outcome in that specific case (what happens when the majority votes for their own demise? if you had veto power, and 100% knowledge, would you use it? I only realized this after reading “Three Body Problem”)

The book Algorithms to Live By (pg 252) has an answer to this:

If you're the kind of person who always does what you think is right, no matter how crazy others think it is, take heart. The bad news is that you will be wrong more often than the herd followers. The good news is that sticking to your convictions creates a positive externality, letting people make accurate inferences from your behavior. There may come a time when you will save the entire herd from disaster.

This is a critical truth for me because it reminds me NOT to make fun or look down upon people who believe things without solid understanding/thinking through it: they are following a rational (possibly superior) strategy for survival.

This way of life is not for everyone, but the good news is: this is not a binary thing. I lived a good life following the herd on everything except writing code, and that made me very valuable as a software engineer. In my organization, *I* was the person who called out things everyone thought were fine (their reasoning was: everyone else thought they were fine) and that repeatedly saved the company tons of time & money.

What I’m trying to say is: I don’t need to be skeptical of every single thing I encounter, I don’t have the time or energy for that (and I also do want to survive).

The important thing is: I know that I can find the truth about anything, even if I can’t find the truth about everything.

I am the one who makes this decision now:

If the risk is too high, I follow the herd

If the risk is low, and the upside of finding an unconventional truth is high (like starting a company, or finding a critical bug/design flaw and telling my coworkers), then I will follow my own path

This, I think, is the shift absolutely everyone can make. Everyone can have this choice. Our world would absolutely be better if everyone was running around feeling that, if they really cared about finding the truth about any particular issue, they could.

In a cosmic coincidence, right after publishing this I heard Steve Levitt talk on his latest podcast episode about "his model of how the world works" and how he tries to predict research results before he reads them!

> I’ve got a model in my head of how the world works — a broad framework for making sense of the world around me. [...] I have a habit of asking myself, “Given my model of the world, what results would I expect the study to generate?” Usually I’m pretty good at guessing what the researchers actually find. But with Ellen Langer, over and over and over, she gets results that I would never predict

https://freakonomics.com/podcast/pay-attention-your-body-will-thank-you/

(so, that's an example of the validation I keep finding: when I independently find something that someone else says is also useful for them, that's a good signal)

Congratulations. You're a scientist.

I'm not being condescending. I think re-examining preconceptions whenever you get new information is the hallmark of maturity. Just remember that information can have bias and deception, depending on where it comes from.

But it sounds like you have a good methodology. I would recommend, however, that you do talk to subject matter experts at some point in the process. A lot of what's out there can misrepresent, for example, what a religion actually teaches. And there are lots of things in medicine and physics that previous work has already disproven but that are still widely believed because they sound plausible. Consider it just another data point, but one worth considering.