Blind Spot Light vs Rear View Camera

Some cars have a “blind spot light”. It tells you if there is a car there or not.

Some cars have a “blind spot camera”. It shows you what is there.

The first one solves the problem for you (decides whether it is safe to turn or not). The second one does NOT decide for you - it surfaces information & you must decide.

Fact checking systems are like the blind spot light (they “solve”).

Community Notes are like the rear view camera (they “surface”).

In a hostile information environment, you want surface, NOT solve.

In “Open Memetics Research of Mine” Shadow Rebbe describes the “Gorgias Problem” as a gap between appearance & reality:

> It takes less energy to make something seem than it does to make it really be

I think the switch from “blind spot light” to “rear view camera” is one solution to this problem. The light on the mirror is a proxy signal that answers a specific question. The camera is not a proxy signal; it is a source of data.

I don’t trust any signal that fails this check:

If it stopped working, would I notice?

If the blind spot light stops working, it stays dark, which looks exactly like “no car there”, so you might think it is safe to turn.

If your fact checker made an error, you might update your world model with the error.

I think relying on these kinds of signals is worse than not having a signal at all. If I know that I do not know (whether there is a car there), I am forced to manually turn my head, or be more careful as I turn.
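
Here is a minimal sketch of the “would I notice?” check in Python. Everything in it is made up for illustration (the `BlindSpotLight` and `Camera` classes, the `failure_is_noticeable` helper), and it assumes a broken camera shows a blank screen rather than a frozen image. The idea: a failure is noticeable only if the broken device produces an output that a working device never would.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class BlindSpotLight:
    """Proxy signal: collapses the scene into light on / light off."""
    working: bool = True

    def output(self, car_present: bool) -> bool:
        # A broken light stays dark, which looks exactly like "no car".
        return self.working and car_present


@dataclass
class Camera:
    """Data source: hands over what it sees; the driver decides."""
    working: bool = True

    def output(self, car_present: bool) -> Optional[str]:
        # Assumption: a broken camera gives no frame at all (blank screen).
        if not self.working:
            return None
        return "car in lane" if car_present else "empty lane"


def failure_is_noticeable(working_device, broken_device) -> bool:
    """True if the broken device emits something a working one never emits."""
    scenes = (False, True)  # no car, car
    normal = {working_device.output(s) for s in scenes}
    broken = {broken_device.output(s) for s in scenes}
    return not broken <= normal


light_check = failure_is_noticeable(BlindSpotLight(), BlindSpotLight(working=False))
camera_check = failure_is_noticeable(Camera(), Camera(working=False))
print("light failure noticeable:", light_check)
print("camera failure noticeable:", camera_check)
```

Under these assumptions the dead light is indistinguishable from a clear lane, while the dead camera is not. A camera that freezes on an old frame would fail the same check the light does, so the check is about the failure mode, not the device.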

I think this concept is a missing prereq for a lot of people, one that is necessary for doing open memetics.

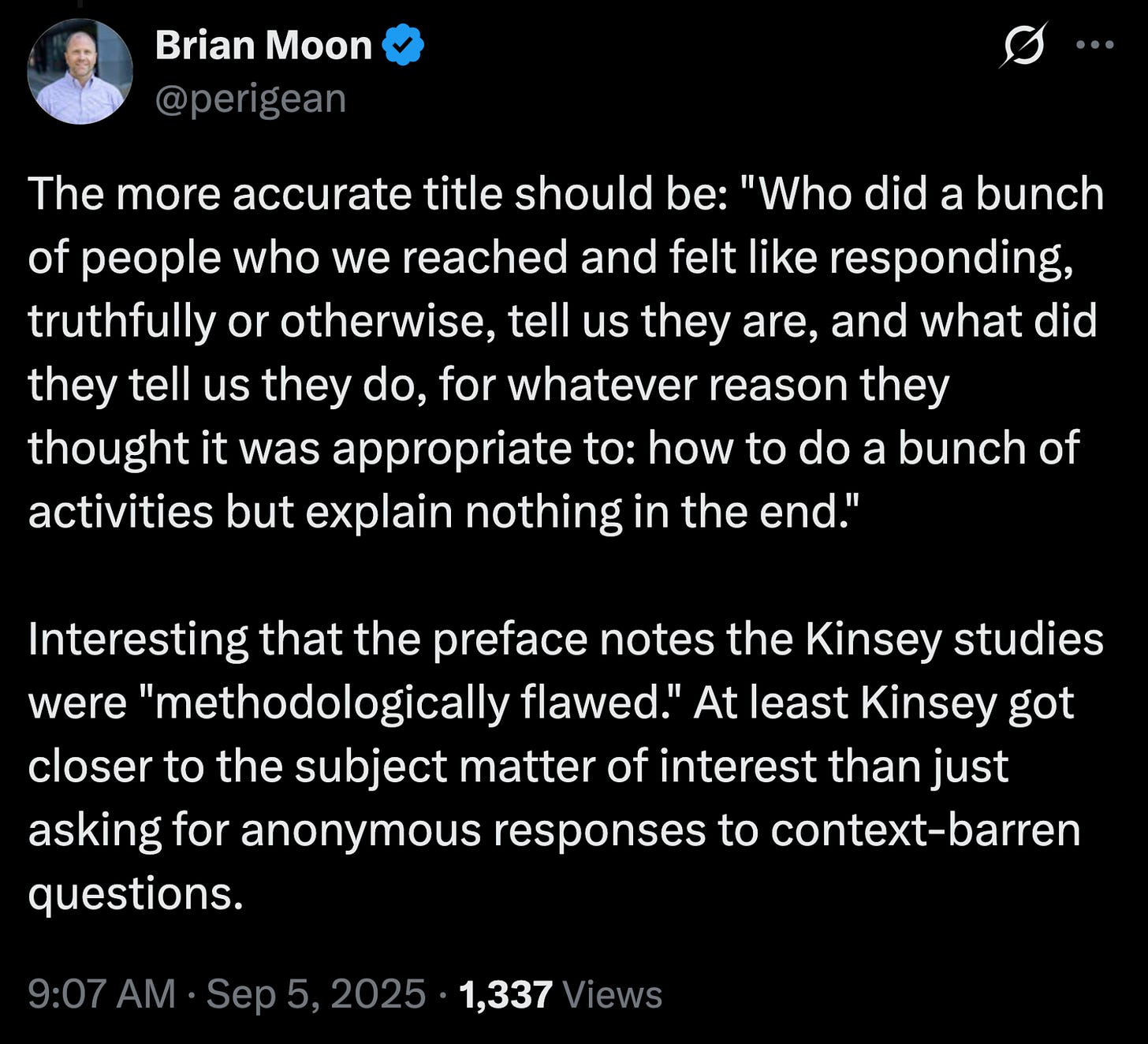

For example, I consider Blaise Agüera y Arcas’s “Who Are We Now?” to be a foundational text for how I do open memetics. A lot of smart people dismiss it completely because they think you can’t extract any truth from subjective, self-reported surveys:

Brian is dismissing it because he thinks of the surveys as a “blind spot light”. They tell you the answer, and you have no way to know whether it’s true or not.

But that’s not how Blaise uses the surveys. You can in fact extract truth from anonymous surveys. It’s very simple: imagine instead of asking a human “do you see a car here?” you ask “describe what you see”.

Instead of asking “do you trust science? yes/no” → ask “who do you trust?”

You MOVE the decision making from the source, to you.
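
A rough sketch of what moving the decision looks like in practice, in Python. The respondents, their answers, and the `trusts_science` rule are all hypothetical. The point is only that the yes/no answer arrives already decided, while the open answer lets you apply (and later change) your own rule.

```python
# Closed question: each respondent decides what "trusting science" means.
closed_answers = {"alice": "yes", "bob": "yes"}

# Open question ("who do you trust?"): respondents only report what they see.
open_answers = {
    "alice": ["my doctor", "a physics professor"],
    "bob": ["a podcast host", "my uncle"],
}


def trusts_science(named_sources, my_definition):
    """Apply the analyst's own rule to the raw answer."""
    return any(source in my_definition for source in named_sources)


# Hypothetical rule chosen by the person reading the survey, not the respondent.
my_definition = {"my doctor", "a physics professor"}

verdicts = {
    name: trusts_science(sources, my_definition)
    for name, sources in open_answers.items()
}
print(verdicts)
```

Both respondents said “yes” to the closed question, but the open answers separate them under this rule. If you disagree with the rule, you can change `my_definition` and re-run it on the same data, which you cannot do with the yes/no answers.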

To check if you understood this concept, here is a simple homework question:

You want to design a survey that tells you what part of the cultural landscape someone is in.

Asking them “are you part of this online community? yes/no” is bad, because there’s no way for you to know if they are telling the truth.

What would you ask them to get an unfakeable signal?

You can find the answer key here: https://github.com/DefenderOfBasic/DefenderOfBasic/issues/1

Requesting review on this post from: Shadow Rebbe, Lincoln Sayger, The Meme Lab, Yassine khayati, Rajeev Ram

Nice!

> Instead of asking “do you trust science? yes/no” → ask “who do you trust?”

> You MOVE the decision making from the source, to you.

Ask qualitative, description-gathering questions ("what sort of posts did you see today, and what did you think about them"), not ones that have already encoded a world model ("what communities are you a part of").

Not only does the latter expect the person to self-editorialize, it assumes well-drawn boundaries that may or may not actually be there.

Some cars have a button that automatically parallel parks using sensors, while others have 360 cameras that allow you to see everything around you while *you* parallel park.

I've often thought about this analogy - I want technology that *augments* my own abilities rather than simply giving away my agency to the technology. Like AI that augments my musical understanding so I can make my own music better, rather than making the music for me.